Security continues to be an overriding concern for a vast array of embedded systems in the Internet of Things (IoT). Systems buried in the electrical grid, power generation, manufacturing, automotive systems, medical devices, building management, gas pumps, toasters and much more can pose a significant risk if infiltrated, Mark Pitchford of LDRA reports.

Fortunately, where security is concerned, the old adage “an ounce of prevention is worth a pound of cure” certainly holds true, and it is the basis of the much newer “shift left” buzz phrase. Engineering foresight helps build secure systems at a lower cost than reactive testing late in development. In fact, because building secure software has much in common with building functionally safe applications, secure software development starts by following functional safety processes.

Consider these best practices to help produce high-quality code and improve the security of embedded systems:

1. Build security into the software development life cycle

Traditional secure code verification is largely reactive: code is developed in accordance with relatively loose guidelines, then tested to identify vulnerabilities. Whether a team uses agile development or a traditional life cycle model, a more proactive approach is available: designing security in from the start.

With traditional development, requirements flow to design, to code (perhaps via a model), and to tests. With agile development, requirements are built up iteratively in layers from the inside out, each with its own loop of requirements, design, code, and test. With either methodology, ensuring that security requirements are an integral part of the development process will lead to a far more satisfactory outcome than merely looking for vulnerabilities at the end.

2. Ensure bidirectional traceability

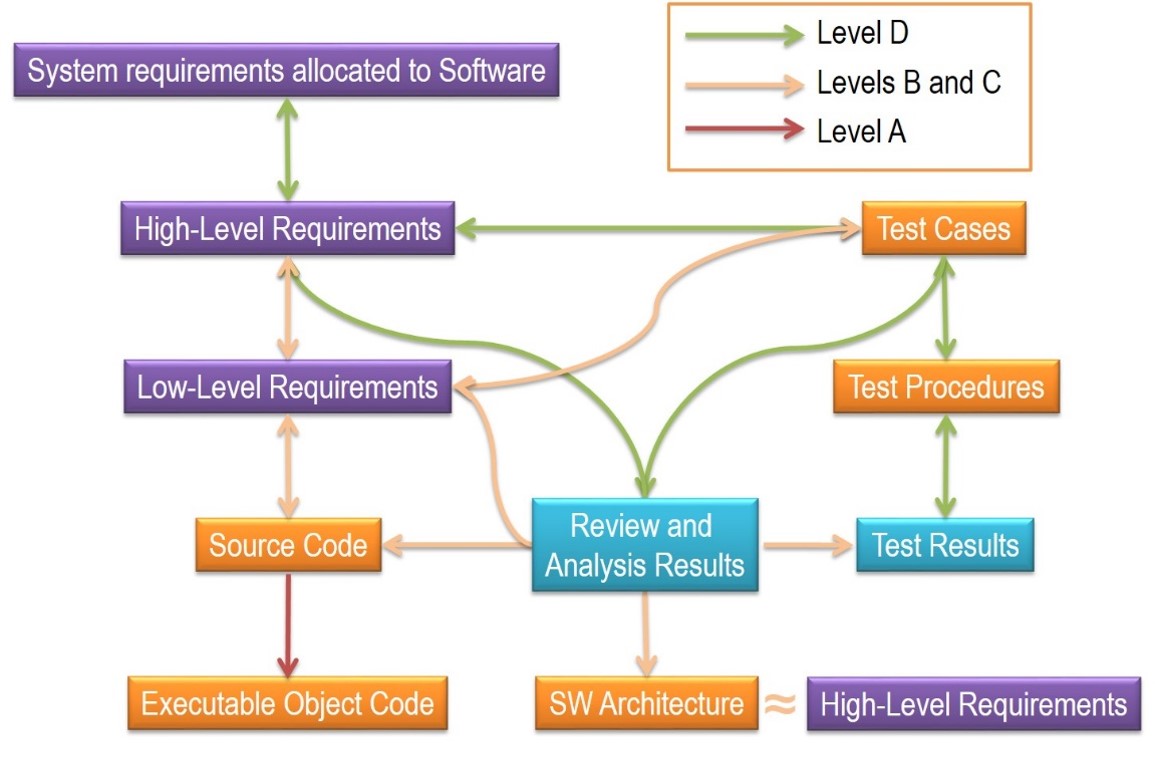

Most functional safety standards require evidence of bidirectional traceability: confirmation of complete and thorough coverage between all stages of development, from requirements through design, code, and test. With such transparency, the impact of any change to requirements, or of any failed test case, can be assessed through impact analysis and then addressed.

Artifacts can be automatically regenerated to present evidence of continued compliance with the appropriate standard. Where security is paramount, bidirectional traceability also ensures that there is no redundant code or unspecified functionality, including backdoor methods. These advantages underline how systematic development increases the ability to build secure systems.

3. Choose a secure language subset

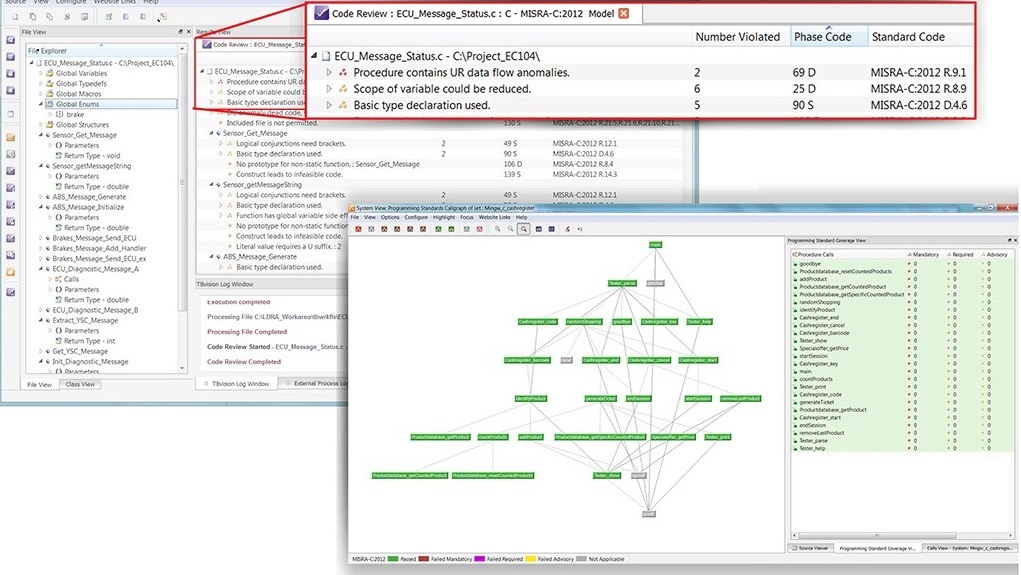

When developing with C or C++, about 80% of software defects can be attributed to the incorrect usage of 20% of the language constructs. Language subsets improve both safety and security by preventing or flagging the use of insecure constructs. Two popular coding standards, MISRA C and the Carnegie Mellon Software Engineering Institute (SEI) CERT C standard, help developers produce secure code.

Applying either MISRA C or CERT C will result in more secure code than if neither were applied. However, manually enforcing these guidelines comes at the price of time, effort, money, and, ironically, quality, because the manual process is complex and error-prone. To reduce costs and improve productivity, development organisations need to automate support for compliance.

4. Use a security-focused process standard

Security standards provide another piece of the secure development solution, although they aren’t as well developed and time-tested as functional safety standards, which have had decades of use. This will change, however, as industry-specific security standards are developed.

The auto industry, for example, is currently developing ISO/SAE 21434 “Road vehicles: Cybersecurity engineering” to address the threat of cyber-attacks on connected vehicles. ISO/SAE 21434 is widely anticipated because it promises a substantial document with more detail than the high-level guiding principles of SAE J3061 “Cybersecurity Guidebook for Cyber-Physical Vehicle Systems.”

5. Automate as much as possible

At each stage of the software development process, automation reduces vulnerabilities in embedded systems and saves a great deal of time and money. Developers can plug into requirements tools (e.g., IBM Rational DOORS), import simulation and modelling constructs, and test these against the code to see at a glance whether and how the requirements are fulfilled, what is missing, and where there is dead code that doesn’t fulfill any requirement.

A static analysis engine can check compliance with coding standards as well as with functional safety and security standards. With automation and secure design, reactive tests such as penetration testing still have a place, but their role is to confirm that the code is secure, not to find out where it isn’t.

6. Select a secure software foundation

Secure embedded software must run on a secure platform so that, if an application is attacked, it is confined to its own silo, isolated from other software components (“domain separation”). Automated software test and verification tools integrate into common development environments across requirements specification, design and modelling, coding, and documentation. This ensures that developers can build secure systems in a familiar environment and with the tools already specified for their system.

Following these six practices provides a cohesive approach to developing embedded software for the IoT that is safe, secure, and reliable.

The author is Mark Pitchford of LDRA.
